Hi, I'm Manish Ram, Chief Scientist at Parafield.

I specialize in Machine Learning in Health, Reinforcement Learning, Spatial Audio Generation, and Building World Models.

My Projects

Projects that I Love Building

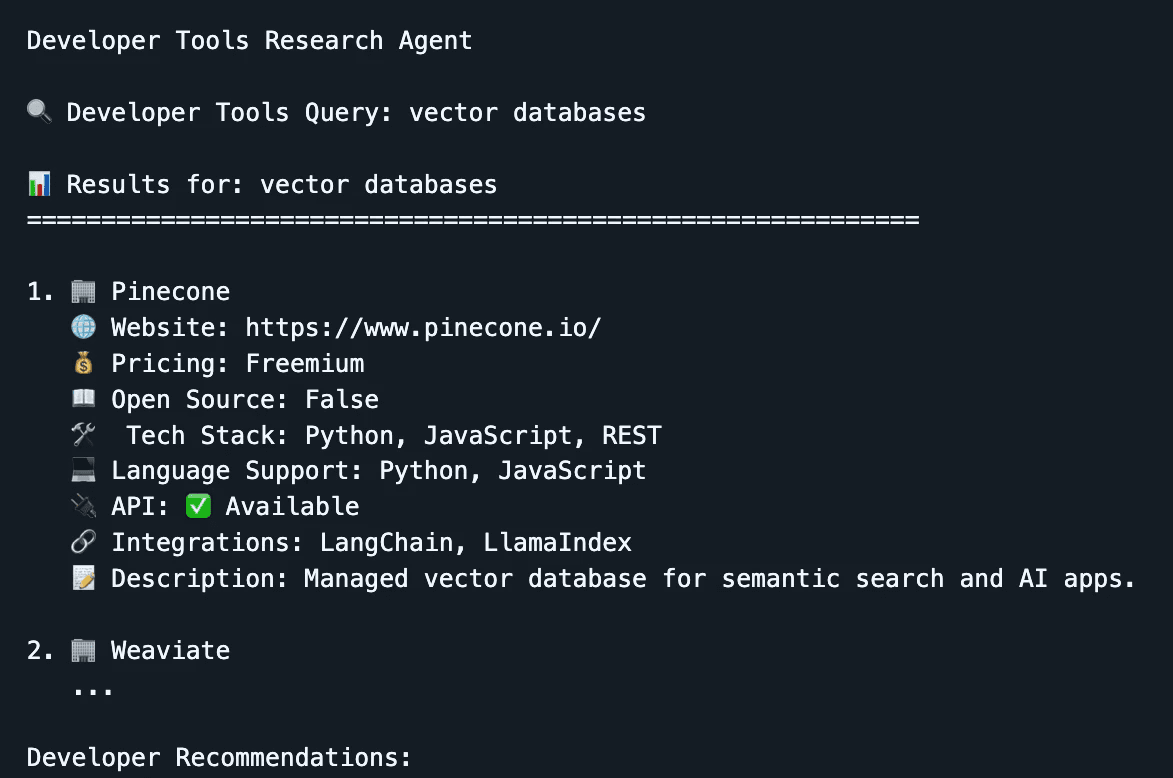

TL;DR

Python

PyTorch

AI/ML

Undergraduate Researcher

DAIS (UW)

2024 - Current

Student Researcher

MedArc

2025 - Current

IT - Lab Lead

Bellevue College

2023 - Current

Work Experience

DAIS - Undergraduate Researcher

• Implemented diffusion transformer (DiT) architectures in PyTorch and TensorFlow to denoise and reconstruct 3D cryo-EM density maps, improving voxel-level fidelity by 15% over baseline DDPM approaches.
• Integrated self-attention mechanisms within the reverse diffusion pipeline to better capture long-range dependencies in volumetric data.
• Scaled DiT model training across multi-GPU environments using PyTorch Distributed Data Parallel (DDP), reducing training time by 40% while maintaining model convergence.
• Designed and optimized custom beta schedules (linear, cosine, and quadratic) for diffusion training, leading to faster convergence and more stable reconstructions.
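For readers unfamiliar with the three beta schedules named above, here is a minimal sketch in plain Python. It is not the project's code: the function names, the default endpoints (`1e-4` and `0.02`), and the cosine offset `s=0.008` are assumptions taken from commonly used DDPM defaults, chosen only to illustrate how the schedules differ.

```python
import math


def linear_betas(T, beta_start=1e-4, beta_end=0.02):
    """Evenly spaced noise variances from beta_start to beta_end (assumed defaults)."""
    if T == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (T - 1)
    return [beta_start + i * step for i in range(T)]


def quadratic_betas(T, beta_start=1e-4, beta_end=0.02):
    """Interpolate linearly in sqrt(beta), then square: slower noise growth early on."""
    roots = linear_betas(T, math.sqrt(beta_start), math.sqrt(beta_end))
    return [r * r for r in roots]


def cosine_betas(T, s=0.008, max_beta=0.999):
    """Derive betas from a squared-cosine cumulative signal level alpha_bar(t)."""
    def alpha_bar(t):
        return math.cos((t / T + s) / (1 + s) * math.pi / 2) ** 2

    # beta_t = 1 - alpha_bar(t + 1) / alpha_bar(t), clipped to avoid beta == 1
    return [min(1 - alpha_bar(t + 1) / alpha_bar(t), max_beta) for t in range(T)]


def alpha_bars(betas):
    """Cumulative product of (1 - beta_t): the fraction of signal left at each step."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out


if __name__ == "__main__":
    T = 1000
    for name, fn in [("linear", linear_betas),
                     ("quadratic", quadratic_betas),
                     ("cosine", cosine_betas)]:
        ab = alpha_bars(fn(T))
        # Compare how much signal survives halfway through each schedule.
        print(f"{name:9s} alpha_bar at T/2 = {ab[T // 2]:.4f}")
```

Printing the cumulative signal level at the midpoint is one simple way to compare how quickly each schedule destroys information; in a PyTorch training loop the returned lists would typically be wrapped in a tensor.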

MedArc - Student Researcher

• Contributing to fMRI foundation models that use masked autoencoding with Vision Transformers (ViT) to learn image embeddings from brain imaging data, including data preprocessing, normalization, and augmentation.
• Implemented network masking and visualization techniques for the seven Yeo et al. (2011) resting-state networks, enhancing interpretability of brain activity patterns.
• Developed Inverse Block Masking strategies for brain modeling to improve spatial context capture.
• Built lightweight debugging and pretraining scripts optimized for CPU, enabling rapid performance checks and visualization of training dynamics.
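The page does not define Inverse Block Masking, so the following is only a rough sketch under one common reading: instead of masking a few blocks of patches, a few contiguous blocks are kept visible and everything else is masked. The function name `inverse_block_mask`, the grid size, and the block parameters are all hypothetical and not taken from the MedArc codebase.

```python
import random


def inverse_block_mask(grid_h, grid_w, block=4, num_blocks=2, seed=None):
    """Return a grid_h x grid_w grid of booleans; True means the patch is masked.

    Assumed interpretation: start fully masked, then reveal `num_blocks`
    randomly placed square blocks of side `block` (blocks may overlap).
    """
    if block > grid_h or block > grid_w:
        raise ValueError("block must fit inside the patch grid")
    rng = random.Random(seed)
    mask = [[True] * grid_w for _ in range(grid_h)]  # start fully masked
    for _ in range(num_blocks):
        top = rng.randint(0, grid_h - block)    # inclusive bounds
        left = rng.randint(0, grid_w - block)
        for r in range(top, top + block):
            for c in range(left, left + block):
                mask[r][c] = False  # patches inside a kept block stay visible
    return mask


if __name__ == "__main__":
    # 14 x 14 patch grid, as produced by a ViT with 16-pixel patches on a 224-pixel image.
    m = inverse_block_mask(14, 14, block=4, num_blocks=2, seed=0)
    for row in m:
        print("".join("#" if masked else "." for masked in row))
```

Keeping contiguous blocks visible gives the encoder spatially coherent context, which is one plausible way to read the "spatial context capture" goal stated in the bullet.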

Skills & Tools

ML and SWE Tools

PyTorch

MLflow

Branding

SHAP

Apache Spark

LangChain

SQL

C/C++

Computer Vision

Deep Learning

Contrastive Learning

CUDA

CI/CD Pipelines
